Amazon’s AI research initiative provides high-performance computing access to accelerate ML advancements, foster AI innovation & democratise research.

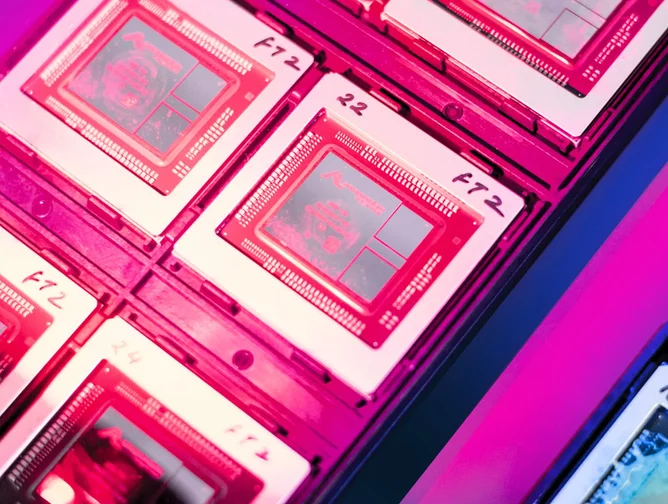

Amazon is investing US$110m in AI research through its new Build on Trainium programme, which offers university-led research teams access to powerful Amazon Web Services (AWS) Trainium chips and high-performance computing infrastructure.

The initiative aims to accelerate the development of next-generation machine learning (ML) models, particularly in the rapidly evolving field of Gen AI.

By providing resources to academic institutions, the programme seeks to foster innovation in AI architecture, performance optimisation and large-scale machine learning research.

This investment reflects Amazon’s broader push to support global AI advancements while making high-performance computing more accessible to researchers worldwide.

New opportunities for AI research

Over the past few years, the growing demand for more advanced AI models – especially in the field of Gen AI – has put immense pressure on research institutions.

Cutting-edge AI development requires vast computational resources, but many universities face budget constraints that limit their ability to access the high-performance infrastructure necessary for large-scale machine learning experiments.

To address this gap, AWS launched the Build on Trainium programme.

This initiative provides university researchers with free access to AWS’s Trainium UltraClusters, which are networks of high-performance AI chips designed specifically for deep learning training and inference.

AWS Trainium chips are optimised for the computationally intensive workloads required to train large-scale AI models, making them a critical resource for advancing AI research.

Through the Build on Trainium programme, researchers will gain access not only to these powerful chips but also to the associated infrastructure, which is crucial for building and testing complex machine learning models.

With these resources, institutions can explore new AI architectures, develop performance optimisation techniques, and push the boundaries of current ML capabilities.

Enhancing AI research with compute power

At the heart of the Build on Trainium programme is the creation of a dedicated Trainium research UltraCluster, which combines up to 40,000 Trainium chips in a high-performance network.

This computational power enables researchers to run large-scale experiments and test sophisticated models in a way that would not be feasible with traditional hardware.

By offering access to such infrastructure, AWS aims to level the playing field for academic researchers who might otherwise struggle to afford the compute resources necessary to make breakthroughs in AI.

The programme also includes Amazon Research Awards, which offer grants that provide AWS credits for Trainium and access to the UltraClusters.

These grants are intended to support a wide range of AI research, from basic algorithm development to large-scale systems optimisation.

Amazon’s involvement with leading academic institutions – such as Carnegie Mellon University (CMU) in Pittsburgh – illustrates the programme’s potential to enhance the quality and scope of AI research worldwide.

At CMU, a prominent research group known as Catalyst is using the AWS resources to investigate new machine learning techniques, including compiler optimisations for AI systems.

Todd C. Mowry, a Professor at CMU, explained that the Build on Trainium programme gives researchers the flexibility to experiment with modern AI accelerators and explore areas such as machine learning parallelisation and optimisation for language models.

According to Todd: “AWS’s Build on Trainium initiative enables our faculty and students large-scale access to modern accelerators, like AWS Trainium, with an open programming model.

“It allows us to greatly expand our research on tensor program compilation, ML parallelisation and language model serving and tuning.”

For many academic institutions, obtaining high-performance computing power has been a barrier to more ambitious AI research.

The costs associated with purchasing and maintaining these kinds of resources are often prohibitive.

By lowering these barriers, the Build on Trainium programme provides a unique opportunity for universities to access the technology needed to advance their work without the significant financial burden.

Building a global AI research community

While the programme offers valuable hardware, its broader goal is to foster collaboration across the global AI research community.

Researchers participating in Build on Trainium will not only have access to advanced computing resources but also have opportunities to publish their findings and contribute to open-source machine learning libraries.

This open-source focus is a key element of the programme, designed to ensure that AI advancements are shared widely and remain accessible to researchers worldwide.

Christopher Fletcher, an Associate Professor at the University of California, Berkeley and a participant in the programme, highlights the flexibility of the AWS Trainium platform, explaining: “The knobs of flexibility built into the architecture at every step make it a dream platform from a research perspective.”

The flexibility of the platform is further enhanced by the introduction of the Neuron Kernel Interface (NKI), a programming interface that provides direct access to the instruction set of the AWS Trainium chip.

This allows researchers to build optimised computational kernels for their AI models, increasing the efficiency and performance of their experiments.

NKI gives AI developers more control over their hardware, which is uncommon in cloud-based environments, making it an important tool for those working on advanced machine learning systems.

Participants in the Build on Trainium programme will also have access to educational resources and technical support through AWS’s Neuron Data Science community.

As Christopher concludes: “Trainium is beyond programmable – not only can you run a program, you get low-level access to tune features of the hardware itself.”